| Version 44 (modified by , 14 years ago) (diff) |

|---|

Parallel-DT

- Short Name: Parallel-DT

- SVN: https://svn-batch.grid.pub.ro/svn/PP2009/proiecte/Parallel-DT

- Team members: Eremia Bogdan, Andrei Minca, Alexandru Sorici

- Project Description: Classification is an important data mining problem. One of the most popular algorithms used for classification purposes are decision trees (DT). Since datasets that are used in data mining problems are usually very large, computationally efficient and scalable algorithms are highly desirable. Thus, the project's goal is to parallelize the decision tree inference process. A shared memory programming model using OpenMP is being considered for this task.

- Project Presentation: https://ncit-cluster.grid.pub.ro/trac/PP2009/attachment/wiki/Parallel-DT/Parallel-DT.ppt

- Project Implementation Details: Details

Serial DT process

Most of the existing induction-based algorithms, also C4.5 that is analysed on this topic, use Hunt's method as the basic algorithm. Here is a recursive description of Hunt's method for constructing a decision tree from a set T of trainning cases with classes denoted {C1, C2, C3, ..., Ck} :

- Case 1 T contains cases all belonging to a single class Cj. The decision tree for T is a leaf identifying class Cj.

- Case 2 T contains cases that belong to a mixture of classes. A test is chosen, based on a single attribute, that has one or more mutually exclusie outcomes {O1, O2, O3, ..., On}. note that in many implementation n is chosen to be 2 and this leads to a binary decision tree. T is partitioned into subsets T1, T2, ..., Tn, where Ti contains all the cases in T that have outcome Oi of the chosen test. The decision tree for T consists of a decision node identifying the test, and one branch for each possible outcome. The same tree building machinery is applied recursively to each subset of training cases.

- Case 3 T containes no cases. the decision tree for T is a leaf, but the class to be asociated with the leaf must be determined from information other than T. For example, C4.5 chosses this to be the most frequent class at the parent of this node.

Parallel approaches

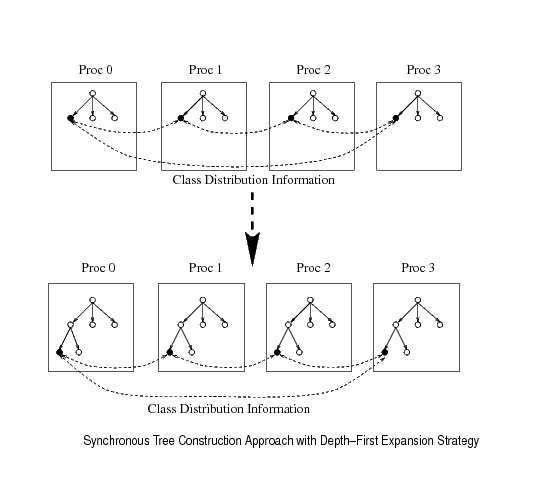

Syncronous Tree Construction - Depth First Expansion Strategy === [the one that we implemented]

In this approach, all processors construct a decision tree syncronously by sending and receiving class distribution information of local data. Major steps for the approach:

- select a node to expand according to a decision tree expansion strategy (eg Depth-First or Breadth-First), and call that node as the current node. At the beginning, root node is selected as the current node

- for each data attribute, collect class distribution information of the local data at the current node

- exchange the local class distribuition information using global reduction among processors

- simultaneously compute the entropy gains of each attribute at each processor and select the best attribute for child node expansion

- depending on the branching factor of the tree desired, create child nodes for the same number of partitions of attributes values, and split training cases accordingly

- repeat above steps until no more nodes are available for the expansion

The figure above shows the overall picture. The advantage of this approach is that it does not require any movement of the training data items. However, this algorithm suffers from high communication cost and load imbalance. For each node in the decision tree, after collecting the class dstribution information, all the processors need to syncronize and exchange the distribution information.

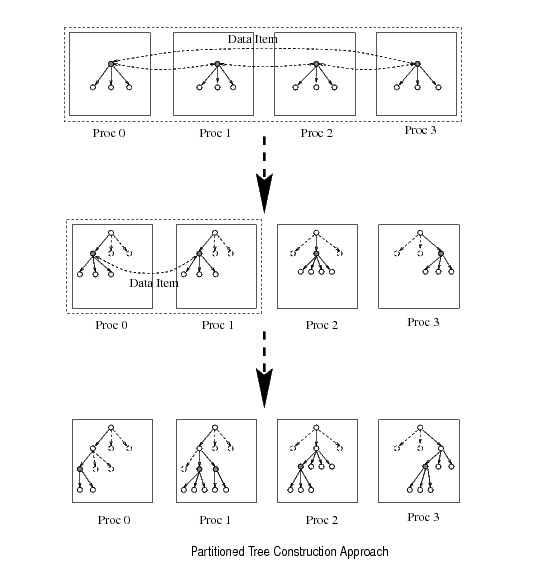

Partitioned Tree Construction

In this approach, whenever feasible, deifferent processors work on different parts of the classification tree. In particular, if more than one processors cooperate to expand a node, then these nodes are partitioned to expand the succesors of this node. Consider the case in wich a group of processors Pn cooperate to expand node n. The algorithm consists of the folowing steps:

Step 1 processors in Pn cooperate to expand node n Step 2 once the node n is expanded in to successors nodes, n1, n2, ..., nk then the processor group Pn is also partitioned, and the successor nodes are assigned to processors as follows:

Case 1: if the number of successor nodes is greater than |Pn|

- partition the successors into |Pn| groups such that the total number of training cases corresponding to each node group is roughly equal. Assign each processor to one node group.

- shufle the training data such that each processor has data items that belong to the nodes it is responsible for.

- now the expansion of the subtrees rooted at a node group proceeds completly independently at each processor as in the serial algorithm

Case 2: otherwise

- assign a subset of processors to each node such that the number of processors assigned to a node is proportional to the number of the training cases corresponding to the node

- shufle the training cases such that each subset of processors has training cases that belongs to the nodes it is responsible for

- processor subsets assigned to different nodes develop subtrees independently.

The advantage of this approach is that once a processor becomes solely responsible for a node, it can develop a subtree of the classification tree independently without any communication overhead. However, there are a number of disadvanteges of this approach. The first disadvantage is that it requires data movement after each node expansion until one processor becomes responsible for an entire subtree. The second disadvantage is poor load balancing inherent in the algorithm.

Project activity

Steps:

- 12 Nov

- Project status: still researching :) - ToDo: chose the best aproach for the serial code that we have

Attachments (6)

-

T.JPG (40.3 KB) - added by 14 years ago.

a small training data set

-

F.JPG (23.3 KB) - added by 14 years ago.

steps in creating the decision tree

-

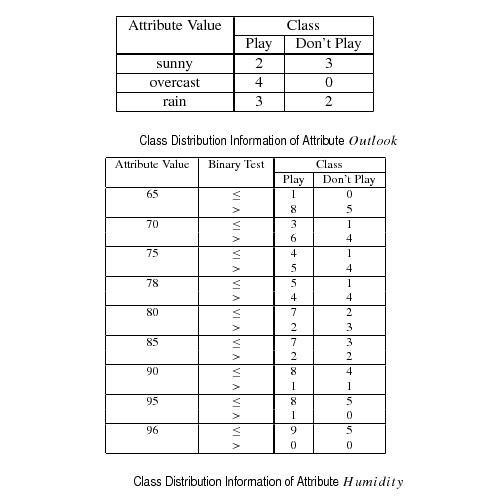

Outlook&Humidity.jpg (44.2 KB) - added by 14 years ago.

Outlook and Humidity attributes

-

SyncronusTreeConstruction-DepthFirstExpansionStrategy.jpg (44.0 KB) - added by 14 years ago.

syncronous treeconstruction

-

PartitionedTreeConstruction.jpg (56.9 KB) - added by 14 years ago.

partitioned tree construction

-

Parallel-DT.ppt (226.0 KB) - added by 14 years ago.

Project Presentation

Download all attachments as: .zip

![(please configure the [header_logo] section in trac.ini)](/trac/PP2009/chrome/site/your_project_logo.png)